Stable Diffusion WebUI is a Gradio-based web interface for Stable Diffusion. Its installer sets up the environment and installs Stable Diffusion automatically, which saves a lot of time. By default, however, the installer expects an NVIDIA or AMD GPU. Installing Stable Diffusion WebUI on a machine without a GPU requires a few modifications.

Using a CPU to run Stable Diffusion can be painful, so this article is just for fun.

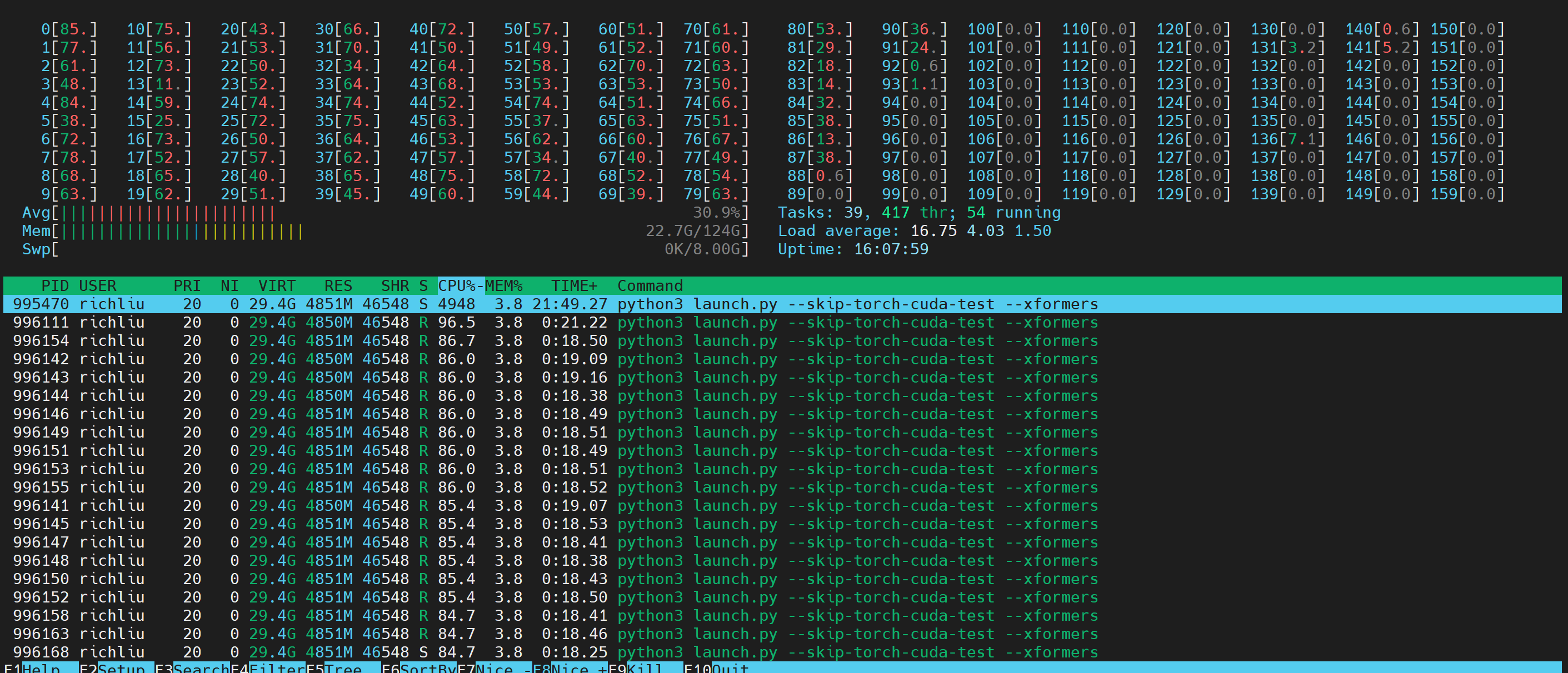

The platform is an Ampere Altra Mt. Collins system with two Ampere Altra CPUs, 160 cores in total.

OS: Ubuntu 22.04.2 LTS

Stable Diffusion WebUI seems to need Python 3.10, so Ubuntu 22.04, which ships Python 3.10 by default, is a good choice.

It appears that Stable Diffusion WebUI requires a user account to run, and should not be run with root privileges.
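Both prerequisites (Python 3.10 and a non-root account) can be checked before starting. The sketch below is only an illustration: `check_prereqs` is a hypothetical helper written for this article, not part of the WebUI.

```python
import os
import sys


def check_prereqs(version_info=None, euid=None):
    """Return a list of human-readable problems; an empty list means OK.

    Hypothetical helper for this article, not a WebUI API.
    """
    if version_info is None:
        version_info = sys.version_info
    if euid is None:
        euid = os.geteuid()  # POSIX only

    problems = []
    # The article assumes Python 3.10, the default on Ubuntu 22.04.
    if tuple(version_info[:2]) != (3, 10):
        problems.append(
            f"Python 3.10 expected, found {version_info[0]}.{version_info[1]}"
        )
    # uid 0 is root; the WebUI should be run from a regular account.
    if euid == 0:
        problems.append("running as root; use a regular user account")
    return problems
```

Calling `check_prereqs()` with no arguments inspects the current interpreter and user; an empty list means both conditions hold.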

Install Necessary Packages

Install all the necessary packages first, especially if the system was set up as a minimal installation.

    sudo apt install wget git python3 python3-venv python3-dev
    sudo apt install libgl-dev ninja-build g++ build-essential
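On a minimal system it is easy to miss one of these. As a rough check, the sketch below looks for the executables those packages provide on PATH. The list and the helper name `missing_tools` are assumptions made for illustration, and libraries such as libgl-dev or python3-venv are not covered because they do not install a command of their own.

```python
import shutil

# Executables expected from the apt packages listed above (assumed names).
REQUIRED_TOOLS = ["wget", "git", "python3", "ninja", "g++", "make"]


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools from `tools` that are not found on PATH."""
    return [tool for tool in tools if which(tool) is None]
```

An empty result from `missing_tools()` suggests the command-line tools are in place.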

Download Source Code

    git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git
    cd stable-diffusion-webui/

Modify Source Code

The Stable Diffusion WebUI source has to be modified so that it installs the CPU build of PyTorch instead of the GPU (CUDA) build.

Modify launch.py:

    --- a/launch.py
    +++ b/launch.py
    @@ -219,7 +219,8 @@ def run_extensions_installers(settings_file):
     def prepare_environment():
         global skip_install
    -    torch_command = os.environ.get('TORCH_COMMAND', "pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117")
    +    torch_command = os.environ.get('TORCH_COMMAND', "pip install torch==1.13.1 torchvision==0.14.1 ")
         requirements_file = os.environ.get('REQS_FILE', "requirements_versions.txt")
         commandline_args = os.environ.get('COMMANDLINE_ARGS', "")
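Note that the patched line reads its value through os.environ.get('TORCH_COMMAND', ...), so the hard-coded string is only a fallback. That suggests the same result may be reachable without editing launch.py, by exporting TORCH_COMMAND before running webui.sh. The sketch below mirrors that lookup to show the behaviour; `resolve_torch_command` is a hypothetical name, and I have not verified that every WebUI version honours the variable.

```python
import os

# Default taken from the unpatched launch.py line in the diff.
GPU_DEFAULT = (
    "pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 "
    "--extra-index-url https://download.pytorch.org/whl/cu117"
)
# CPU variant used in the patched line.
CPU_COMMAND = "pip install torch==1.13.1 torchvision==0.14.1"


def resolve_torch_command(env=None):
    """Mimic launch.py: use TORCH_COMMAND if set, else the built-in default."""
    if env is None:
        env = os.environ
    return env.get("TORCH_COMMAND", GPU_DEFAULT)
```

With TORCH_COMMAND set to the CPU command, the lookup returns it unchanged and the CUDA index URL is never used.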

Next, modify webui.py. On my system the public service could not be created and behaved strangely, so I changed the source to make it listen on a specific IP address. (Note: the current version seems to have fixed this already; adding --listen makes it listen on 0.0.0.0.)

    --- a/webui.py
    +++ b/webui.py
    @@ -215,9 +215,11 @@ def webui():
                 for line in file.readlines():
                     gradio_auth_creds += [x.strip() for x in line.split(',')]
         app, local_url, share_url = shared.demo.launch(
    -        share=cmd_opts.share,
    -        server_name=server_name,
    +        share=True,
    +        server_name="0.0.0.0",
             server_port=cmd_opts.port,
             ssl_keyfile=cmd_opts.tls_keyfile,
             ssl_certfile=cmd_opts.tls_certfile,
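With server_name="0.0.0.0" the server accepts connections on every network interface rather than only on loopback. A quick way to confirm this from another machine is to attempt a TCP connection to the WebUI port. The sketch below uses only the standard library; `is_port_open` is a hypothetical helper, and 7860 is assumed as the port (Gradio's usual default) unless a different one was passed with --port.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out.
        return False
```

For example, `is_port_open("192.0.2.10", 7860)` would test a server at the placeholder address 192.0.2.10; substitute the real address of the machine.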

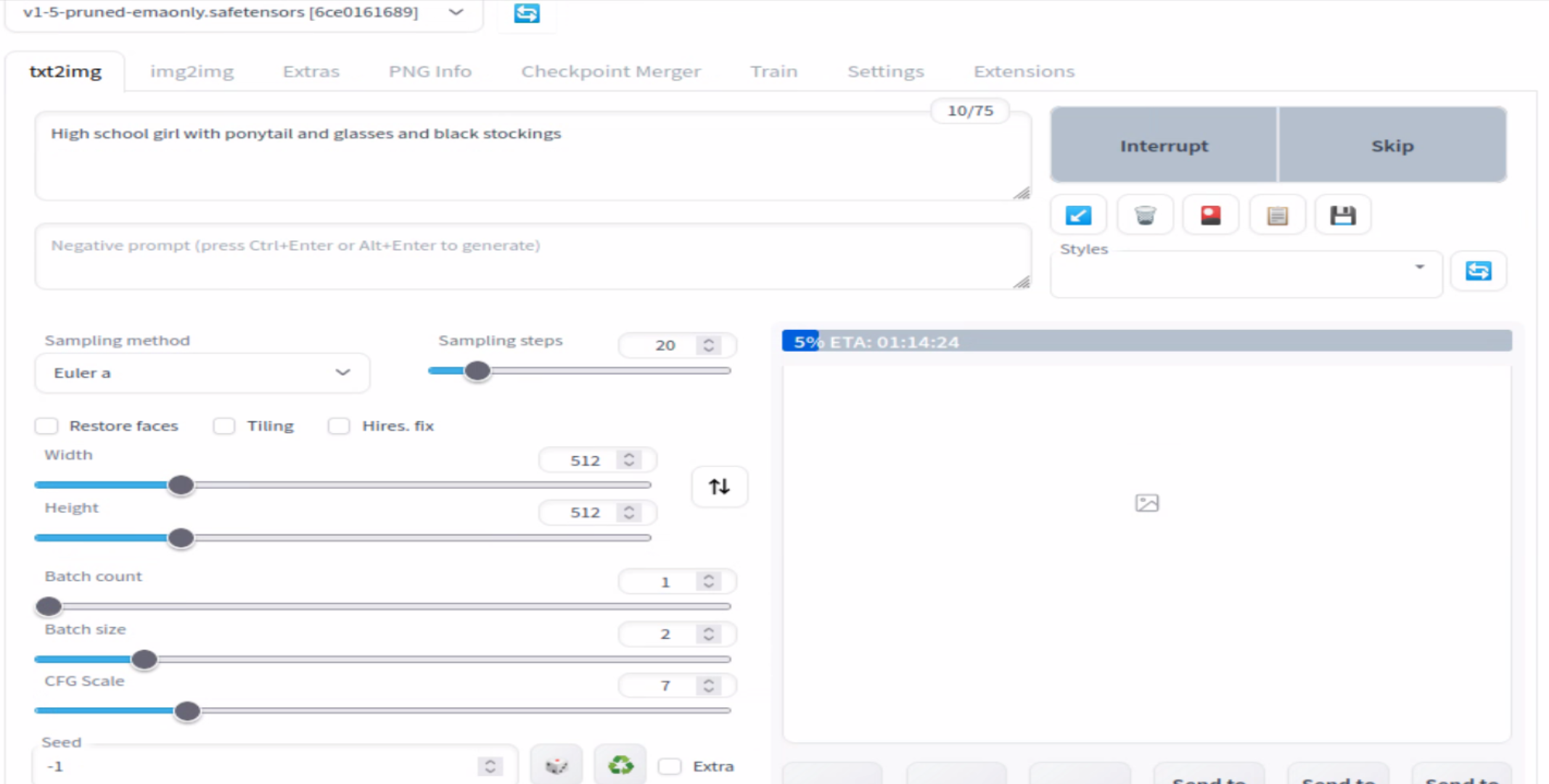

Run

Before running Stable Diffusion, some arguments have to be passed so that it uses the CPU.

It also needs --skip-torch-cuda-test to skip the GPU check.

The xformers module (--xformers) uses the GPU, so don't enable it.

    export CUDA_VISIBLE_DEVICES=-1
    export COMMANDLINE_ARGS="--share --listen --enable-insecure-extension-access --use-cpu all --no-half --precision full"
    bash webui.sh --skip-torch-cuda-test
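The same launch can be scripted. The sketch below builds the CPU-only environment and hands it to webui.sh through subprocess. The helper names `build_env` and `launch_webui` and the default directory are placeholders chosen for illustration.

```python
import os
import subprocess

# Arguments from the article; --xformers is deliberately left out.
CPU_ARGS = [
    "--share",
    "--listen",
    "--enable-insecure-extension-access",
    "--use-cpu", "all",
    "--no-half",
    "--precision", "full",
]


def build_env(base=None):
    """Return a copy of the environment with the CPU-only settings added."""
    env = dict(os.environ if base is None else base)
    env["CUDA_VISIBLE_DEVICES"] = "-1"  # hide any GPU from PyTorch
    env["COMMANDLINE_ARGS"] = " ".join(CPU_ARGS)
    return env


def launch_webui(webui_dir="stable-diffusion-webui"):
    """Run webui.sh from `webui_dir`; blocks until the server exits."""
    return subprocess.run(
        ["bash", "webui.sh", "--skip-torch-cuda-test"],
        cwd=webui_dir,
        env=build_env(),
        check=True,
    )
```

Keeping the arguments in a list makes it harder to reintroduce a GPU-only option by accident when editing the string.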

After the script starts, it downloads all the necessary packages and launches the web UI. However, it does not seem to use all CPU cores to render a picture.
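When not all cores are busy, it can help to first confirm how many CPUs the process is actually allowed to use. The sketch below is a diagnostic only, and `available_cpus` and `thread_env` are hypothetical helpers. Setting OMP_NUM_THREADS from that number is a common way to size the thread pool of OpenMP-based libraries such as PyTorch, but whether it improves rendering on this system is an untested assumption.

```python
import os


def available_cpus():
    """Number of CPUs this process may run on (falls back to the total)."""
    if hasattr(os, "sched_getaffinity"):  # available on Linux
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def thread_env(n_threads):
    """Environment fragment that asks OpenMP-based libraries for n threads."""
    if n_threads < 1:
        raise ValueError("n_threads must be at least 1")
    return {"OMP_NUM_THREADS": str(n_threads)}
```

If `available_cpus()` reports fewer CPUs than the machine has, the limit comes from the process affinity (for example a container or taskset), not from Stable Diffusion.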

Accelerate

Setting export ACCELERATE=True seems to improve performance while using less CPU.

Accelerate can be configured with this command:

    ./venv/bin/accelerate config

Here is my configuration:

    $ ./venv/bin/accelerate config
    In which compute environment are you running? ([0] This machine, [1] AWS (Amazon SageMaker)): 0
    Which type of machine are you using? ([0] No distributed training, [1] multi-CPU, [2] multi-GPU, [3] TPU [4] MPS): 1
    How many different machines will you use (use more than 1 for multi-node training)? [1]:
    How many CPU(s) should be used for distributed training? [1]:160
    Do you wish to use FP16 or BF16 (mixed precision)? [NO/fp16/bf16]: bf16
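The accelerate config command saves these answers to a YAML file so that later runs can reuse them. Assuming the default location used by Hugging Face Accelerate (under the Hugging Face cache directory, which HF_HOME can relocate), the sketch below computes where that file should be and prints it if present. `accelerate_config_path` and `show_config` are hypothetical helpers, and the path may differ between Accelerate versions.

```python
import os
from pathlib import Path


def accelerate_config_path(env=None):
    """Best-guess location of the file written by `accelerate config`."""
    if env is None:
        env = os.environ
    cache_root = env.get("XDG_CACHE_HOME", "~/.cache")
    hf_home = env.get("HF_HOME", os.path.join(cache_root, "huggingface"))
    return Path(hf_home).expanduser() / "accelerate" / "default_config.yaml"


def show_config(path=None):
    """Print the saved configuration, or a hint if it does not exist yet."""
    path = accelerate_config_path() if path is None else Path(path)
    if path.is_file():
        print(path.read_text())
    else:
        print(f"no config at {path}; run ./venv/bin/accelerate config first")
```

Running `show_config()` after the questionnaire is a simple way to review what was stored.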
